Optimizing compiler. Auto parallelization

Multicore and multiprocessor systems are the de facto standard


Intel® Pentium® Processor Extreme Edition (2005-2007)

Intel® Xeon® Processor 5100, 5300 Series

Intel® Core™2 Processor Family (2006-)

Intel® Xeon® Processor 5200, 5400, 7400 Series

Intel® Core™2 Processor Family (2007-)

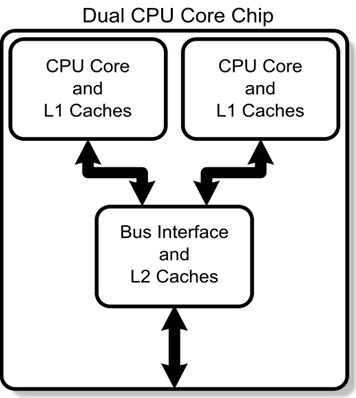

2-4 processors, up to 6 cores

Intel® Atom™ Processor Family (2008-)

Energy efficiency is a high priority

Intel® Core™ i7 Processor Family (2008-)

Hyper-Threading Technology. Systems with non-uniform memory access (NUMA).

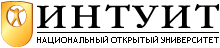

A CPU core is a complete computer system that shares some of its processing resources, such as caches, with other cores.
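A program can ask the OS at run time how many logical processors it exposes; with Hyper-Threading enabled, one core may appear as more than one logical processor. A minimal sketch, assuming a POSIX-like system (Linux, macOS) where `sysconf` accepts `_SC_NPROCESSORS_ONLN`, which is a widely supported extension rather than part of base POSIX. The function name `online_cpu_count` is hypothetical.

```c
#include <unistd.h>

/* Hypothetical helper: returns the number of logical processors currently
 * online (cores, or hardware threads when Hyper-Threading is enabled).
 * Assumes sysconf supports _SC_NPROCESSORS_ONLN; falls back to 1 if the
 * query fails. */
long online_cpu_count(void)
{
    long n = sysconf(_SC_NPROCESSORS_ONLN);
    return n > 0 ? n : 1;
}
```

A multi-threaded program can use this value as a starting point for how many worker threads to create.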

Multiprocessor systems fall into three classes:

- Massively parallel computers, i.e. systems with distributed memory (MPP systems).

- Shared memory systems (SMP systems).

- Systems with non-uniform memory access (NUMA).

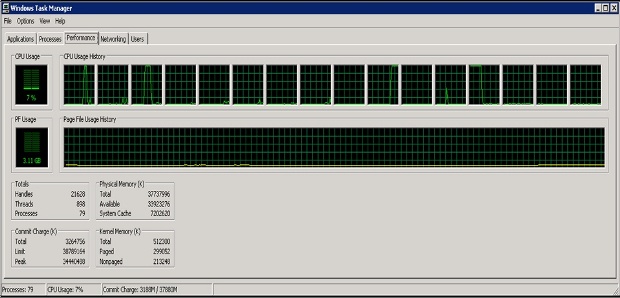

Application performance is unstable on multiprocessor machines with non-uniform memory access.

The OS periodically moves the application from one core to another, and the new core may have a different access speed to the memory the application uses.
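One common way to stop the OS from migrating a thread is to set its CPU affinity. A minimal Linux-only sketch, assuming glibc, where `sched_getaffinity`, `sched_setaffinity` and the `CPU_*` macros are GNU extensions enabled by `_GNU_SOURCE`. The helper name `pin_to_first_allowed_cpu` is hypothetical.

```c
#define _GNU_SOURCE   /* must come before any #include for the GNU extensions */
#include <sched.h>

/* Hypothetical helper: pins the calling thread to the first CPU it is
 * currently allowed to run on, so the scheduler can no longer move it to a
 * core with a different memory access speed.
 * Returns the chosen CPU number, or -1 on failure. */
int pin_to_first_allowed_cpu(void)
{
    cpu_set_t allowed;
    if (sched_getaffinity(0, sizeof allowed, &allowed) != 0)
        return -1;

    for (int cpu = 0; cpu < CPU_SETSIZE; ++cpu) {
        if (CPU_ISSET(cpu, &allowed)) {
            cpu_set_t one;
            CPU_ZERO(&one);
            CPU_SET(cpu, &one);
            /* pid 0 means "the calling thread" */
            if (sched_setaffinity(0, sizeof one, &one) != 0)
                return -1;
            return cpu;
        }
    }
    return -1; /* empty affinity mask: not expected in practice */
}
```

Pinning trades the scheduler's freedom to balance load for predictable memory access latency, so it is usually applied only to long-running, memory-bound threads.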

Pros and cons of multi-threaded applications

- Pros:

- Cons:

- Conclusion:

Auto-parallelization is a compiler optimization that automatically converts sequential code into multi-threaded code in order to use multiple cores simultaneously. Its purpose is to free the programmer from difficult and tedious manual parallelization.
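Whether a loop can be parallelized automatically depends on whether its iterations are independent. The two hypothetical functions below look similar, but only the first is a candidate: in the second, each iteration reads the value written by the previous one (a loop-carried dependence). This is a sketch for illustration; what a particular compiler actually parallelizes depends on its own analysis.

```c
#include <stddef.h>

/* Independent iterations: each i writes only a[i] and reads only b[i], c[i].
 * `restrict` promises the arrays do not overlap, which helps the compiler
 * prove that the iterations can run on different threads. */
void vec_add(double *restrict a, const double *restrict b,
             const double *restrict c, size_t n)
{
    for (size_t i = 0; i < n; ++i)
        a[i] = b[i] + c[i];
}

/* Loop-carried dependence: iteration i reads a[i - 1], written by
 * iteration i - 1, so the loop cannot simply be split across threads
 * and a typical auto-parallelizer leaves it sequential. */
void prefix_sum(double *a, size_t n)
{
    for (size_t i = 1; i < n; ++i)
        a[i] += a[i - 1];
}
```

For example, `prefix_sum` turns `{1, 2, 3, 4}` into `{1, 3, 6, 10}`; computing element 3 requires element 2 to be finished first.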

/Qparallel

Enables auto-parallelization: the compiler generates multi-threaded code for loops that can safely be executed in parallel.
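Conceptually, the compiler divides the iteration space of a safe loop among several threads. The sketch below writes that transformation by hand with an OpenMP pragma, for illustration only; it is not the code the Intel compiler emits. It assumes OpenMP is enabled at compile time (e.g. `-fopenmp` with GCC or `/Qopenmp` with the Intel compiler). Without OpenMP, the pragma is ignored and the loop runs sequentially with the same result. The function name `scale_parallel` is hypothetical.

```c
/* Hypothetical example: a[i] = k * b[i] for i in [0, n).
 * Iterations are independent, so OpenMP may hand each thread a chunk of
 * indices. The loop index is a signed type for compatibility with older
 * OpenMP versions. */
void scale_parallel(double *restrict a, const double *restrict b,
                    double k, long n)
{
    #pragma omp parallel for
    for (long i = 0; i < n; ++i)
        a[i] = k * b[i];
}
```

With /Qparallel the intent is to get a similar effect from the plain sequential loop, without the pragma, e.g. `icl /Qparallel prog.c` on Windows (the classic Intel compiler spells the option `-parallel` on Linux).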