The Vinum Volume Manager

Vinum is a Volume Manager, a virtual disk driver that addresses these three issues:

From a user's viewpoint, Vinum looks almost exactly like a disk, but in addition to the disks there is a maintenance program.

Vinum objects

Vinum implements a four-level hierarchy of objects:

- The most visible object is the virtual disk, called a volume. Volumes have essentially the same properties as a UNIX disk drive, though there are some minor differences. They have no size limitations.

- Volumes are composed of plexes, each of which represents the total address space of a volume. This level in the hierarchy thus provides redundancy. Think of plexes as individual disks in a mirrored array, each containing the same data.

- Vinum exists within the UNIX disk storage framework, so it would be possible to use UNIX partitions as the building block for multi-disk plexes, but in fact this turns out to be too inflexible: UNIX disks can have only a limited number of partitions. Instead, Vinum subdivides a single UNIX partition (the drive) into contiguous areas called subdisks, which it uses as building blocks for plexes.

- Subdisks reside on Vinum drives, currently UNIX partitions. Vinum drives can contain any number of subdisks. With the exception of a small area at the beginning of the drive, which is used for storing configuration and state information, the entire drive is available for data storage.

Plexes can include multiple subdisks spread over all drives in the Vinum configuration, so the size of an individual drive does not limit the size of a plex, and thus of a volume.
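The hierarchy described above can be modelled as a small set of nested records. This is only an illustrative sketch: the class and field names are hypothetical and do not correspond to Vinum's internal structures. It assumes a concatenated plex (its size is the sum of its subdisks) and assumes that a volume is as large as its largest plex.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Drive:
    name: str
    size: int  # sectors available for data (hypothetical unit)


@dataclass
class Subdisk:
    drive: Drive
    offset: int  # first sector of the subdisk on its drive
    length: int  # number of sectors


@dataclass
class Plex:
    subdisks: List[Subdisk] = field(default_factory=list)

    def size(self) -> int:
        # Assumption: concatenated plex, so the sizes simply add up.
        return sum(sd.length for sd in self.subdisks)


@dataclass
class Volume:
    name: str
    plexes: List[Plex] = field(default_factory=list)

    def size(self) -> int:
        # Assumption: the volume is as large as its largest plex.
        return max((p.size() for p in self.plexes), default=0)


# Two drives of 1000 sectors each; one plex takes 800 sectors from each.
a, b = Drive("a", 1000), Drive("b", 1000)
plex = Plex([Subdisk(a, 0, 800), Subdisk(b, 0, 800)])
volume = Volume("demo", [plex])
```

Here the plex, and therefore the volume, holds 1600 sectors, more than either drive could provide on its own.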

Mapping disk space to plexes

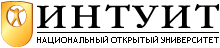

The way the data is shared across the drives has a strong influence on performance. It's convenient to think of the disk storage as a large number of data sectors that are addressable by number, rather like the pages in a book. The most obvious method is to divide the virtual disk into groups of consecutive sectors the size of the individual physical disks and store them in this manner, rather like the way a large encyclopaedia is divided into a number of volumes. This method is called concatenation, and sometimes JBOD (Just a Bunch Of Disks). It works well when the access to the virtual disk is spread evenly about its address space. When access is concentrated on a smaller area, the improvement is less marked. Figure 12-1 illustrates the sequence in which storage units are allocated in a concatenated organization.
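As a rough sketch of concatenation, the function below translates a sector number on the virtual disk into a component index and an offset within that component. The function name and the use of plain sector counts are assumptions made for illustration; this is not Vinum's code.

```python
from bisect import bisect_right
from itertools import accumulate


def concat_map(sector, lengths):
    """Map a virtual sector to (component index, offset in component).

    `lengths` lists the size of each component in sectors, in the
    order in which they are concatenated.
    """
    # starts[i] is the first virtual sector stored on component i;
    # the final entry is the total size of the virtual disk.
    starts = [0] + list(accumulate(lengths))
    if not 0 <= sector < starts[-1]:
        raise ValueError("sector outside the virtual disk")
    index = bisect_right(starts, sector) - 1
    return index, sector - starts[index]
```

With components of 100, 50 and 200 sectors, virtual sector 99 is the last sector of the first component, and sector 100 is the first sector of the second. Nothing is written to a later component until the address space of all earlier ones is used up, which is why concentrated access tends to land on a single disk.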

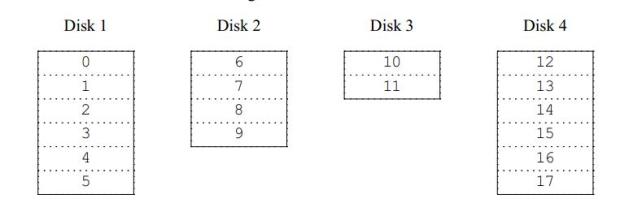

An alternative mapping is to divide the address space into smaller, equal-sized components, called stripes, and store them sequentially on different devices. For example, the first stripe of 292 kB may be stored on the first disk, the next stripe on the next disk and so on. After filling the last disk, the process repeats until the disks are full. This mapping is called striping or RAID-0. (RAID stands for Redundant Array of Inexpensive Disks and offers various forms of fault tolerance, though the term is somewhat misleading in the case of RAID-0, which provides no redundancy.) Striping requires somewhat more effort to locate the data, and it can cause additional I/O load where a transfer is spread over multiple disks, but it can also provide a more constant load across the disks. Figure 12-2 illustrates the sequence in which storage units are allocated in a striped organization.
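The striped mapping can be sketched in a few lines. The function name is hypothetical, and the example assumes 512-byte sectors, so that a 292 kB stripe is 584 sectors long; neither detail comes from Vinum itself.

```python
def stripe_map(sector, stripe_size, ndisks):
    """Map a virtual sector to (disk index, sector offset on that disk).

    `stripe_size` is the stripe length in sectors; every disk is
    assumed to hold the same number of stripes.
    """
    stripe_no, within = divmod(sector, stripe_size)
    # Stripes are dealt out to the disks in turn; `row` counts how
    # many complete passes over all disks precede this stripe.
    row, disk = divmod(stripe_no, ndisks)
    return disk, row * stripe_size + within


STRIPE = 584  # 292 kB in 512-byte sectors (assumed sector size)
```

For four disks, `stripe_map(0, STRIPE, 4)` lands on disk 0, `stripe_map(584, STRIPE, 4)` on disk 1, and the fifth stripe wraps around to disk 0 again, directly after the first stripe stored there.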

Data integrity

Vinum offers two forms of redundant data storage aimed at surviving hardware failure: mirroring, also known as RAID level 1, and parity, also known as RAID levels 2 to 5.

Mirroring maintains two or more copies of the data on different physical hardware. Any write to the volume writes to both locations; a read can be satisfied from either, so if one drive fails, the data is still available on the other drive. It has two problems:

- The price. It requires twice as much disk storage as a non-redundant solution.

- The performance impact. Writes must be performed to both drives, so they take up twice the bandwidth of a non-mirrored volume. Reads do not suffer from a performance penalty: you only need to read from one of the disks, so in some cases, they can even be faster.
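The behaviour of a mirror can be illustrated with a toy in-memory model: every write goes to all surviving copies, and a read is served from any one of them. The class below is a hypothetical sketch for illustration only and says nothing about how Vinum schedules its I/O.

```python
class Mirror:
    """Toy mirror: each copy is a bytearray standing in for a drive."""

    def __init__(self, ncopies, size):
        self.copies = [bytearray(size) for _ in range(ncopies)]
        self.failed = set()  # indices of copies that have failed

    def write(self, offset, data):
        if offset < 0 or offset + len(data) > len(self.copies[0]):
            raise ValueError("write outside the volume")
        # One logical write costs one physical write per live copy.
        for i, copy in enumerate(self.copies):
            if i not in self.failed:
                copy[offset:offset + len(data)] = data

    def read(self, offset, length):
        # Any surviving copy can satisfy the read.
        for i, copy in enumerate(self.copies):
            if i not in self.failed:
                return bytes(copy[offset:offset + length])
        raise IOError("all copies have failed")
```

After `m = Mirror(2, 16)` and `m.write(4, b"abc")`, marking the first copy as failed with `m.failed.add(0)` still lets `m.read(4, 3)` return the data from the second copy.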

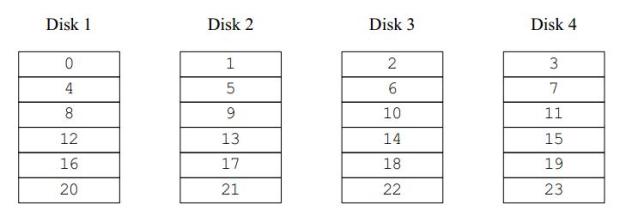

The most interesting of the parity solutions is RAID level 5, usually called RAID-5. The disk layout is similar to striped organization, except that one block in each stripe contains the parity of the remaining blocks. The location of the parity block changes from one stripe to the next to balance the load on the drives. If any one drive fails, the driver can reconstruct the data with the help of the parity information, and the array continues to operate in degraded mode: a read from one of the remaining accessible drives continues normally, but a read request from the failed drive is satisfied by recalculating the contents from all the remaining drives. Writes simply ignore the dead drive. When the drive is replaced, Vinum recalculates the contents and writes them back to the new drive.
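The reconstruction described above relies on the parity block being the bytewise exclusive OR of the data blocks in the stripe, which is the usual RAID-5 construction. The sketch below shows that any one missing block can be recomputed from the others. The helper names are hypothetical, and the rotation rule in `parity_disk` is merely one simple possibility, not necessarily the layout Vinum uses.

```python
from functools import reduce


def xor_blocks(blocks):
    """Return the bytewise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def parity_disk(stripe_no, ndisks):
    # Hypothetical rotation: the parity block moves one disk along
    # with each stripe. The real layout may differ.
    return stripe_no % ndisks


# Three data blocks in one stripe, plus their parity block.
data = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]
parity = xor_blocks(data)

# Pretend the drive holding data[1] has failed: XOR the surviving
# data blocks with the parity block to recover its contents.
recovered = xor_blocks([data[0], data[2], parity])
```

Here `recovered` equals `data[1]`. The same calculation, run over every stripe, is what repopulates a replacement drive.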

In the following figure, the numbers in the data blocks indicate the relative block numbers.

Compared to mirroring, RAID-5 has the advantage of requiring significantly less storage space. Read access is similar to that of striped organizations, but write access is significantly slower, approximately 25% of the read performance.
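The storage comparison can be made concrete with a short calculation. The sketch below assumes two-way mirroring by default and equal-sized disks. The I/O counts assume the conventional read-modify-write cycle for a small RAID-5 write (read old data, read old parity, write new data, write new parity); that accounting is an assumption offered as one plausible explanation of the 25% figure, not something stated in the text. The function names are hypothetical.

```python
from fractions import Fraction


def usable_fraction(org, ndisks, copies=2):
    """Fraction of the raw disk space that is available for data."""
    if org in ("concat", "striped"):
        return Fraction(1)
    if org == "mirror":
        return Fraction(1, copies)
    if org == "raid5":
        if ndisks < 3:
            raise ValueError("RAID-5 needs at least three disks")
        # One block per stripe holds parity.
        return Fraction(ndisks - 1, ndisks)
    raise ValueError("unknown organization: " + org)


def small_write_ios(org, copies=2):
    """Physical I/Os per small logical write (assumed accounting)."""
    return {"concat": 1, "striped": 1, "mirror": copies, "raid5": 4}[org]
```

With five disks, `usable_fraction("raid5", 5)` is 4/5, against 1/2 for a two-way mirror. Under the assumed accounting a small RAID-5 write costs four physical transfers where a read costs one, which matches the roughly 25% write performance quoted above.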

Vinum also offers RAID-4, a simpler variant of RAID-5 which stores all the parity blocks on one disk. This makes the parity disk a bottleneck when writing. RAID-4 offers no advantages over RAID-5, so it's effectively useless.

Which plex organization?

Each plex organization has its unique advantages:

- Concatenated plexes are the most flexible: they can contain any number of subdisks, and the subdisks may be of different lengths. They are the only kind of plex that can be extended without loss of data, which is done by adding subdisks. They require less CPU time than striped or RAID-5 plexes, though the difference in CPU overhead from striped plexes is not measurable.

- The greatest advantage of striped (RAID-0) plexes is that they reduce hot spots: by choosing an optimum-sized stripe (between 256 and 512 kB), you can even out the load on the component drives. The disadvantage of this approach is the restriction on subdisks, which must all be the same size. Extending a striped plex by adding new subdisks is so complicated that Vinum currently does not implement it. A striped plex must have at least two subdisks: otherwise it is indistinguishable from a concatenated plex. In addition, there's an interaction between the geometry of UFS and Vinum that makes it advisable not to have a stripe size that is a power of 2: that's the background for the mention of a 292 kB stripe size in the example above.

- RAID-5 plexes are effectively an extension of striped plexes. Compared to striped plexes, they offer the advantage of fault tolerance, but the disadvantages of somewhat higher storage cost and significantly worse write performance. Like striped plexes, RAID-5 plexes must have equal-sized subdisks and cannot currently be extended. Vinum enforces a minimum of three subdisks for a RAID-5 plex: any smaller number would not make any sense.

- Vinum also offers RAID-4, although this organization has some disadvantages and no advantages when compared to RAID-5. The only reason for including this feature was that it was a trivial addition: it required only two lines of code.
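The subdisk restrictions listed above can be collected into a small checking function. This is a sketch of the rules as stated in this section only: the function name and the organization labels are hypothetical, and RAID-4 is left out because the text gives no minimum for it.

```python
# Minimum subdisk counts as stated in the text.
MIN_SUBDISKS = {"striped": 2, "raid5": 3}


def check_plex(org, lengths):
    """Return a list of problems with a proposed plex.

    `org` is "concat", "striped" or "raid5"; `lengths` gives the
    size of each subdisk. An empty list means no rule is violated.
    """
    if org not in ("concat", "striped", "raid5"):
        raise ValueError("unknown organization: " + org)
    problems = []
    if org == "concat":
        # Any number of subdisks, of any lengths.
        return problems
    if len(lengths) < MIN_SUBDISKS[org]:
        problems.append(
            "%s plex needs at least %d subdisks" % (org, MIN_SUBDISKS[org])
        )
    if len(set(lengths)) > 1:
        problems.append("%s plex needs equal-sized subdisks" % org)
    return problems
```

For example, `check_plex("concat", [100, 250, 40])` reports nothing, while `check_plex("striped", [100])` and `check_plex("raid5", [100, 100, 50])` each report one problem.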

The following table summarizes the advantages and disadvantages of each plex organization.